Solving Real World Problems in the Public Service with AI

ETHOS Issue 27, January 2025

The current artificial intelligence (AI) surge is not unlike the early dot-com boom of the 1990s—ripe with promise but also peril. To properly harness this technological watershed, the Public Service must adopt, adapt and apply it to deliver meaningful solutions that address real-world problems faced by the public, and by our fellow public officers.

AI tools are still evolving. To succeed, we must experiment with them, but it is not enough to do so in silos. We must co-create our solutions together, matching technical expertise with ground knowledge of the challenges we face.

Working Together on Real-World Use Cases

To identify and address real-world problems, the Government Technology Agency of Singapore (GovTech) has been deploying our officers to public agencies to work alongside their peers who deal with these challenges daily in the course of work. Apart from the technical expertise our personnel can provide, these joint teams can also tap on the larger pool of resources back in GovTech where needed.

Since 2023, such deployments have helped 13 agencies on their problem use cases, resulting in 21 AI-based experiments, including one deployed at the whole-of-government (WOG) level.

These use cases point to three major areas in which AI approaches have been helpful to the Public Service.

1. Enhancing Decision Making through Advanced Analytics

Officers want to make better sense of the vast quantities of unstructured data they collect to support informed decision making. Such problems benefit from approaches that leverage Large Language Models (LLMs), machine learning algorithms and natural language processing to automate and enhance the extraction of meaningful insights from large volumes of text data.

Ministry of Home Affairs, Cyber Security Agency, and GovTech: Recursive Machine-Learning Site Evaluator (rMSE)

To combat scams, enforcement agencies and other authorities need to assess more than 100,000 potential scam websites each day. The rMSE system leverages advancements in Generative AI (gen AI) and Computer Vision to build state-of-the-art machine-learning models that classify and evaluate potential scam sites by their technical attributes. By fine-tuning the model with local scam data, rMSE can classify sites more accurately (5x less error and 9x more scam sites flagged) than models based on just a global dataset. The model classifies sites in mere seconds, substantially speeding and scaling up anti-scam efforts.

SkillsFuture Singapore (SSG): Automating CRM analytics with Agentic AI and GraphRAG

SSG sought a more efficient and scalable solution to analyse their customer relationship management (CRM) data. A proof-of-concept trial showed that Agentic AI could automate and enhance the complex workflow for deriving insights, while graph measures of centrality and node similarity could be used to identify clusters of popular topics that recur across cases and to highlight interventions needed. Using GraphRAG, a method to enhance the performance of LLMs with external data, improved the system's ability to correctly identify and connect related topics over time. This made it easier for officers to retrieve accurate and concise information using natural-language queries.
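To make the graph measures concrete, here is a minimal sketch of how centrality and node similarity might surface recurring topics. It is not SSG's implementation: the case data, the topic labels and the helper names (`build_topic_graph`, `degree_centrality`, `jaccard_similarity`) are all hypothetical, and degree centrality and Jaccard similarity are only two of several measures that could be used.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical toy data: each case is tagged with the topics it mentions.
cases = {
    "case-1": {"course fees", "claim status", "login"},
    "case-2": {"claim status", "login"},
    "case-3": {"course fees", "claim status"},
    "case-4": {"login", "password reset"},
}

def build_topic_graph(cases):
    """Link two topics whenever they appear together in the same case."""
    graph = defaultdict(set)
    for topics in cases.values():
        for a, b in combinations(sorted(topics), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph

def degree_centrality(graph):
    """Share of the other topics that each topic is directly linked to."""
    n = len(graph)
    if n < 2:
        return {topic: 0.0 for topic in graph}
    return {topic: len(nbrs) / (n - 1) for topic, nbrs in graph.items()}

def jaccard_similarity(graph, a, b):
    """Overlap between the neighbour sets of two topics, from 0 to 1."""
    na, nb = graph[a] - {b}, graph[b] - {a}
    union = na | nb
    return len(na & nb) / len(union) if union else 0.0

graph = build_topic_graph(cases)
centrality = degree_centrality(graph)
most_central = max(centrality, key=centrality.get)
similarity = jaccard_similarity(graph, "claim status", "course fees")
```

In this toy graph, the topic linked to the most other topics is the one that recurs across the widest range of cases, and two topics with near-identical neighbours are candidates for the same cluster.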

Given the large volume of cases, and the time-consuming nature of existing manual processes, SSG could only conduct their analysis on a quarterly basis. The new AI Agents and GraphRAG have transformed a resource-intensive analysis into an efficient, automated workflow. SSG can now process their approximately 30,000 quarterly cases 62.5x faster. The quality of CRM analysis has also markedly improved, enabling better evidence-based Business Process Redesign. SSG plans to develop this solution into an official tool for internal use, and may run this automated analysis at a higher frequency, for more timely interventions.

2. Driving Operational Efficiency

Operational efficiency is a key area that has seen significant strides from deploying AI. Our projects in this category aim to streamline processes and reduce the cognitive load on our officers, freeing them to focus on more strategic tasks.

Ministry of Social and Family Development (MSF): Social Report Companion

With increasing caseloads, case workers from MSF spend significant time and effort on report writing. The quality of these reports varies across case workers, which can impact the effectiveness of interventions.

After spending about a week with MSF officers, our team came up with an innovative solution: the Social Report Companion (SRC). More than just a drafting tool, SRC uses large language models to create an Assessment Guide for each type of assessment, ensuring consistency and accuracy across reports. SRC refines users' short notes based on this guide as well as a set of Gold Standard notes, producing a high-quality, well-structured report. Since the nature of case work involves sensitive client data, SRC includes features to detect and redact personally identifiable information (PII).
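As an illustration of what detecting and redacting PII can involve, the sketch below uses regular expressions. It is an assumption-laden example, not SRC's actual mechanism: the patterns (an NRIC-style identifier, an email address, an eight-digit local phone number) and the `redact_pii` helper are hypothetical, and a production system would need far broader coverage, such as names and addresses.

```python
import re

# Illustrative patterns only (assumptions, not SRC's rules).
PII_PATTERNS = {
    # NRIC/FIN-style identifier: prefix letter, seven digits, suffix letter.
    "NRIC": re.compile(r"\b[STFGM]\d{7}[A-Z]\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # Eight-digit number starting with 6, 8 or 9, optionally split 4-4.
    "PHONE": re.compile(r"(?<!\d)[689]\d{3}[ -]?\d{4}(?!\d)"),
}

def redact_pii(text):
    """Replace each match with a [LABEL] tag and report what was found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        def _replace(match, label=label):
            found.append((label, match.group()))
            return f"[{label}]"
        text = pattern.sub(_replace, text)
    return text, found

note = "Client S1234567D can be reached at 9123 4567 or jane@example.com."
redacted, found = redact_pii(note)
```

Returning the list of matches alongside the redacted text lets a reviewer check what was removed before the notes are passed to a language model.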

SRC reduces time spent on report writing, allowing case workers to handle more cases and focus on uplifting clients. Since launching in October 2023, SRC has helped generate over 4,693 sections of case reports, saving MSF officers 163 hours in writing.

SRC has been recognised internally within MSF. It won the Exemplary Innovator Award under the IDEAL Awards 2024, a testament to the hard work and dedication of everyone involved. More importantly, the success of the SRC has inspired other divisions within MSF and related agencies to explore the potential of gen AI in social work.

3. Providing Support and Assistance

Efficient support and assistance systems are crucial for managing and responding to public enquiries well. In the work of government, where public trust and satisfaction are paramount, such systems play a vital role in ensuring effective communication and service delivery. The goal is to reduce response times, increase the accuracy of information provided and enhance the overall user experience.

Syariah Court (SYC) & Registry of Muslim Marriages (ROMM): Public Query Management

SYC and ROMM were faced with a backlog of over 10,000 public queries. To ease their burden, we developed a proof-of-concept system, powered by a large language model, to provide first-cut responses. The system dramatically reduced response times and improved user satisfaction by ensuring timely and relevant answers to public queries.

Reusable Platforms for Common Needs

In implementing various AI projects, a number of key technical components have emerged as common necessities across different use cases.

Key Technical Components

- Natural Language Processing (NLP): Essential for understanding and generating human-like text in tools like the Social Report Companion.

- Retrieval-Augmented Generation (RAG): Combines retrieval-based methods with generative models to handle extensive knowledge bases efficiently.

- Large Language Models (LLMs): Core to generating high-quality and contextually appropriate content, especially when engaging the public.

- Data Protection and Security: Crucial for all projects, ensuring sensitive information is handled securely.

- Integration with Existing Systems: Seamless integration with existing IT infrastructures and databases is a common requirement across all projects.

- Customisation and Flexibility: Different agencies need tools that can be easily customised to meet their specific needs without extensive re-engineering.

Recognising these commonalities has led us to develop reusable platforms, enabling us to streamline development and deployment processes across different applications and to maximise efficiency and scalability.

By focusing on reusable platforms, we maximise the impact of our AI initiatives, ensuring that every agency benefits from the latest advancements while minimising duplication of effort and resources. This strategic approach accelerates the deployment of effective AI solutions and fosters a culture of innovation and collaboration across the Public Service.

Reusable Platforms

SmartCompose @smartcompose.gov.sg

SmartCompose uses LLMs to help draft content more efficiently, with features for quality control and PII handling. Its NLP and LLM modules can be adapted for various content generation tasks across different agencies, such as copywriting for marketing material.

Since its launch, SmartCompose has been used to generate 12,850 emails in 90 agencies, cutting the average time officers need to draft an email reply from 20 minutes to 5.4 minutes.
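The figures above imply a rough scale of time saved, which the short calculation below works through. The total is an extrapolation rather than a reported result: it assumes every one of the emails saved the average amount of drafting time, and the variable names are illustrative.

```python
# Figures quoted in the text.
emails_drafted = 12_850
minutes_before = 20.0
minutes_after = 5.4

# Derived values (assumes each email saved the average drafting time).
saved_per_email = minutes_before - minutes_after
reduction = saved_per_email / minutes_before
total_hours_saved = emails_drafted * saved_per_email / 60

print(f"{saved_per_email:.1f} minutes saved per email ({reduction:.0%} less)")
print(f"about {total_hours_saved:,.0f} officer-hours in total")
```

Under that assumption, the saving works out to 14.6 minutes per email, a 73% reduction, or roughly 3,100 officer-hours across all emails drafted.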

AIBots @aibots.gov.sg

AIBots uses RAG to create smart chatbots that can access and use information from agencies’ internal databases to provide accurate and relevant answers to queries. The RAG framework ensures that the chatbots can be tailored using each agency's own data as a knowledge base, without the need for expensive and complicated fine-tuning or training of the AI models.
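The retrieval step described above can be sketched in a few lines. This is a simplified illustration, not the AIBots implementation: it ranks passages by word-overlap cosine similarity where a real system would more likely use learned embeddings, and the knowledge base, `retrieve` and `build_prompt` are hypothetical. The assembled prompt would then be sent to an LLM, which is left out here.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Return up to k passages most similar to the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]

def build_prompt(query, documents, k=2):
    """Place the retrieved passages in the prompt as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

# Hypothetical agency knowledge base.
knowledge_base = [
    "Leave applications must be submitted at least three working days in advance.",
    "The office pantry is restocked every Monday morning.",
    "Claims for transport expenses require a receipt and manager approval.",
]
question = "How early must I submit a leave application?"
prompt = build_prompt(question, knowledge_base, k=1)
```

Because the agency's data enters only through the prompt, updating the chatbot's knowledge means editing the passages rather than retraining the model.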

Building Capabilities to Leverage AI Safely

All new technologies are unpredictable when they first emerge. We must continually experiment with gen AI to test its boundaries and uncover any undesirable side-effects. The most common problem with gen AI is its tendency to hallucinate, that is, to produce wrong or nonsensical results that are presented confidently as correct. Approaches to limit gen AI's hallucinations have been well documented and discussed, and we have applied them to our own products.

However, since gen AI is now widespread and commonplace, members of the public are exposed to its risks. We went one step further to see if we could do anything about them. Specifically, we wanted to see if we could target other risk areas beyond hallucination, such as:

- Toxicity: when LLMs generate hateful, explicit, threatening or sexual content in response to user prompts;

- Harm: when LLMs encourage dangerous behaviour, including self-harm or criminal acts.

There are benchmarks to evaluate the effectiveness of safeguards provided by most closed-source LLMs, such as OpenAI’s ChatGPT and Anthropic’s Claude. However, these benchmarks are usually based on the context in which the LLMs are developed and used, which tends to be specific to North America or Europe. They are therefore less able to detect content specific to Singapore (such as content written in Singlish). While it is not possible to fully prevent all toxic or harmful outputs from LLMs, we thought that building a moderation classifier that could detect unsafe output would help filter out the most harmful content.

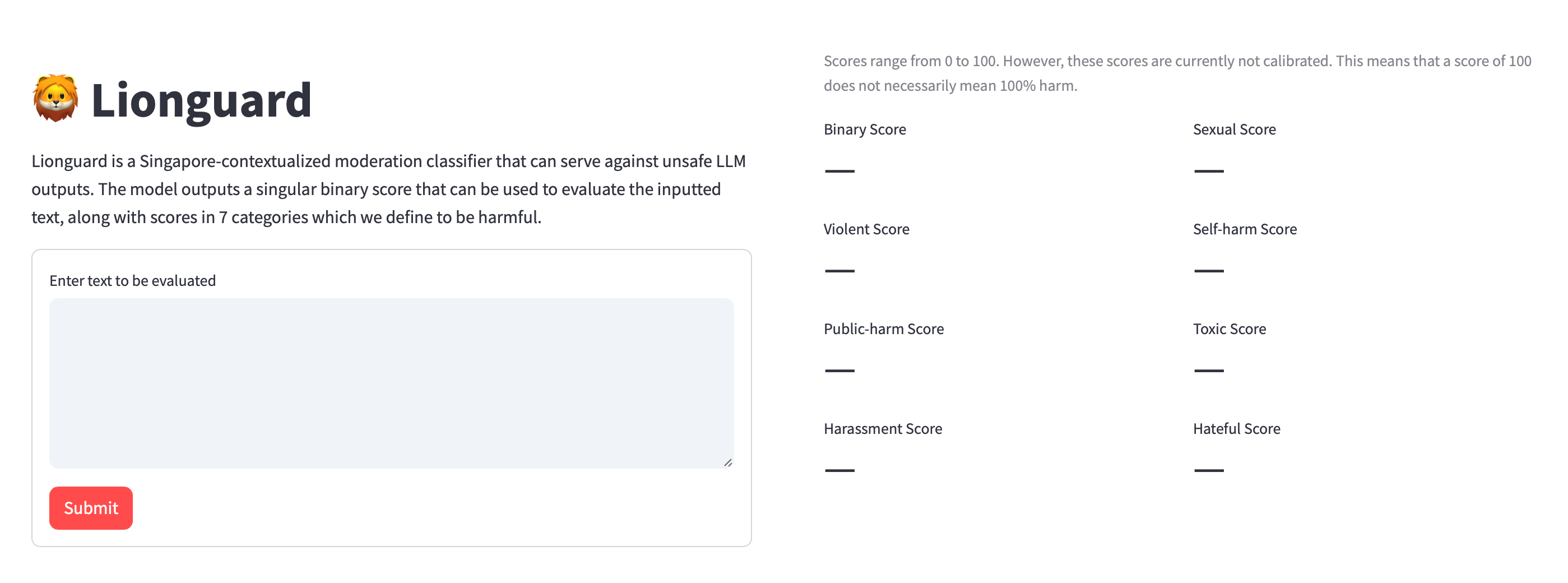

After a few months of testing, we created a moderation classifier tool called LionGuard, which has already outperformed commercial off-the-shelf moderation APIs on texts that include Singlish content. On content labelled as hateful and toxic, LionGuard demonstrated a significant improvement in detection.
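The way a moderation classifier can sit between an LLM and its users can be sketched as follows. This is a hedged illustration, not LionGuard's interface: `moderate`, `toy_classifier`, the category names and the thresholds are all hypothetical, and the keyword stub merely stands in for a trained model.

```python
from typing import Callable, Dict

Scores = Dict[str, float]

def moderate(
    text: str,
    classify: Callable[[str], Scores],
    thresholds: Scores,
    fallback: str = "Sorry, I can't help with that.",
) -> str:
    """Return the text unchanged unless any category score reaches its limit."""
    scores = classify(text)
    for category, limit in thresholds.items():
        if scores.get(category, 0.0) >= limit:
            return fallback
    return text

def toy_classifier(text: str) -> Scores:
    """Keyword stub standing in for a trained model (hypothetical)."""
    words = set(text.lower().split())
    return {
        "toxicity": 1.0 if words & {"idiot", "stupid"} else 0.0,
        "harm": 1.0 if words & {"weapon"} else 0.0,
    }

thresholds = {"toxicity": 0.5, "harm": 0.5}
blocked = moderate("You are an idiot", toy_classifier, thresholds)
allowed = moderate("The library opens at nine", toy_classifier, thresholds)
```

Keeping the classifier behind a simple callable means a localised model can replace a generic moderation API without changing the surrounding application.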

Towards the Next Iteration

As GovTech’s Chief Technology Officer, I encourage all government agencies to collaborate with us to experiment with AI technologies.

By partnering with GovTech, agencies gain access to a wealth of expertise, resources, and infrastructure dedicated to driving innovation in the public sector. Our teams of engineers are at the forefront of AI research and development, equipped with the knowledge and experience to guide agencies through the complexities of AI experimentation. Working with GovTech will allow agencies to leverage economies of scale, reducing the cost and effort associated with conducting such experiments.

We welcome all ideas and participants to work together with us in Engineering Digital Government, Making Lives Better.