AI: Cybersecurity Friend or Foe?

ETHOS Issue 27, January 2025

Even as Artificial Intelligence (AI) continues to capture the public’s imagination and gain wider adoption, the question of whether it is friend or foe remains a subject of significant debate. While AI promises to boost efficiency and innovation and drive us to the next bound of growth, it also risks significantly disrupting society, and may have unforeseen impacts on the digital divide. In this debate, it is important to be clear about the risks of AI, and what we are putting at stake. We cannot afford to overlook the security risks to AI itself, the risks arising from AI, and the impact AI may have on other security risks. There is also the risk that security concerns may undermine trust in broader AI use and in the benefits it could otherwise bring.

Is AI Secure?

AI is vulnerable to new and old risks

AI promises significant benefits in terms of productivity and decision-making. However, just like any other software, the adoption of new AI systems can introduce new risks or exacerbate existing ones for organisations. To maximise the benefits of AI, the technology has to be made safe and secure. This ensures that the output of these models is accurate, reliable, and will not harm users or systems.

First, an AI system is vulnerable to new risks which may not have been observed in traditional IT systems. Malicious actors can leverage a new class of threats known as adversarial machine learning (AML) to attack AI models. These include data poisoning attacks to compromise the AI’s machine learning and training process, inference attacks to gain unauthorised access to sensitive or restricted data, and extraction attacks to steal the model itself. AML attacks could trigger models to produce inaccurate, biased, or harmful output, or to reveal confidential information. This could undermine wider confidence that AI is trustworthy and reliable.
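To make the idea of data poisoning concrete, the sketch below trains a deliberately simple nearest-centroid classifier twice: once on clean data, and once after an attacker has slipped mislabelled samples into the training set. Everything in it is an illustrative assumption: the single "suspiciousness score" feature, the toy data, and the function names are hypothetical, and real AML attacks against production models are considerably more subtle.

```python
from statistics import mean

def train_centroids(samples):
    """Return the mean feature value per label (a toy nearest-centroid model)."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Assign the label whose centroid lies closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

def accuracy(centroids, samples):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(predict(centroids, v) == label for v, label in samples)
    return correct / len(samples)

# Hypothetical toy data: one "suspiciousness score" per sample.
clean_train = [
    (1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
    (7.0, "malicious"), (8.0, "malicious"), (9.0, "malicious"),
]
test_set = [
    (1.5, "benign"), (2.5, "benign"), (4.0, "benign"),
    (6.0, "malicious"), (6.5, "malicious"), (8.5, "malicious"),
]

# Poisoning step (assumed attacker capability): inject high-scoring samples
# that are deliberately mislabelled as benign, dragging the benign centroid up.
poison = [(9.0, "benign"), (10.0, "benign"), (11.0, "benign")]

clean_model = train_centroids(clean_train)
poisoned_model = train_centroids(clean_train + poison)

print("accuracy on clean training data:   ", accuracy(clean_model, test_set))
print("accuracy on poisoned training data:", round(accuracy(poisoned_model, test_set), 2))
print("score 6.5 classified as:", predict(poisoned_model, 6.5))
```

In this toy setting, three injected samples are enough to make the model wave through inputs it previously flagged, which is why the integrity of training data matters as much as the security of the model itself.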

Second, AI may also be attacked by classical cyber threats. AI is a complicated, expansive system of hardware, software, and information components. Attackers can target any part of this extensive ecosystem to disrupt our access to and use of AI. For example:

- Copious amounts of enterprise data are required to train an AI model to perform specific tasks. This makes it an attractive target to exploit. Many AI systems are also connected to the internet, which provides malicious actors with more vectors, and thus more opportunities, to attack sensitive datasets.

- Organisations and users risk losing the ability to access and use AI tools if there are disruptions to cloud services, data centre operations, submarine cables, and other digital infrastructure. This would disable systems that depend on AI tools to function.

AI can be misused to threaten security

While AI can uplift our productivity and economic growth, it could also be misused to provide malicious actors with devastating capabilities. There are legitimate concerns about the rise of AI-enabled threats to digital security—including AI-enabled cyberattacks, misinformation and disinformation campaigns, as well as scams.

Cybersecurity and AI firms have reported that threat actors are already misusing AI to boost the productivity of malicious workflows and will continue using AI to enhance the sophistication of their attack tactics, techniques, and procedures. In the near term, sophisticated actors could learn to use AI to develop polymorphic malware, which alters its own code to evade cybersecurity defences. In the future, we expect threat actors to train AI models to mount autonomous attacks that can adapt to the target or environment without human intervention.

In Singapore, we are also concerned about a heightened risk of AI-enabled misinformation, disinformation, and scams. This could include the malicious use of deepfakes, which could be used to influence elections, for instance. As generative AI models improve, it will be increasingly difficult to determine if content is authentic and trustworthy. This could have a long-term impact on how much members of the public trust information that is published and broadcast online, and in the digital domain in general.

Singapore's Approach to Addressing AI Risks

Singapore aspires to be a pacesetter: a global leader in choice AI areas that are economically impactful and that serve the public good. To reap the full benefits of AI, users must have confidence that the technology is safe and secure and will behave as designed. Users need to be assured that AI models will generate outcomes that are trustworthy and harm-free. To support these goals, Singapore is investing in measures to understand AI security risks, and how to manage them. This helps us address risks from the potential abuse or mismanagement of AI as early as possible, and foster a trusted AI environment that protects users and facilitates innovation.

Nationally, we have launched an initiative called the AI Verify Foundation (aiverifyfoundation.sg), harnessing the expertise of the global open-source community to promote the development of responsible AI testing tools and capabilities. This will give users and enterprises more assurance that AI systems can meet the needs of companies and regulators, regardless of their jurisdiction. This effort is led by IMDA, and there are more than 60 members in the Foundation, including organisations such as IBM, Google, Sony, Deloitte, DBS and SIA, who share our interest in protecting AI.

Introduced by IMDA in 2024, the Model AI Governance Framework for Generative AI (MGF-GenAI) sets out best practices for stakeholders to manage the risks posed to users. The framework expands upon IMDA's current Model Governance Framework for Traditional AI, which was last updated in 2020. It articulates emerging principles, concerns, and technological developments relevant to the governance of Generative AI and provides a starting point for what stakeholders can do to manage the risks. Security is a core element in MGF-GenAI, and the framework provides guidance on how to address new threat vectors to security, which may arise through Generative AI models.

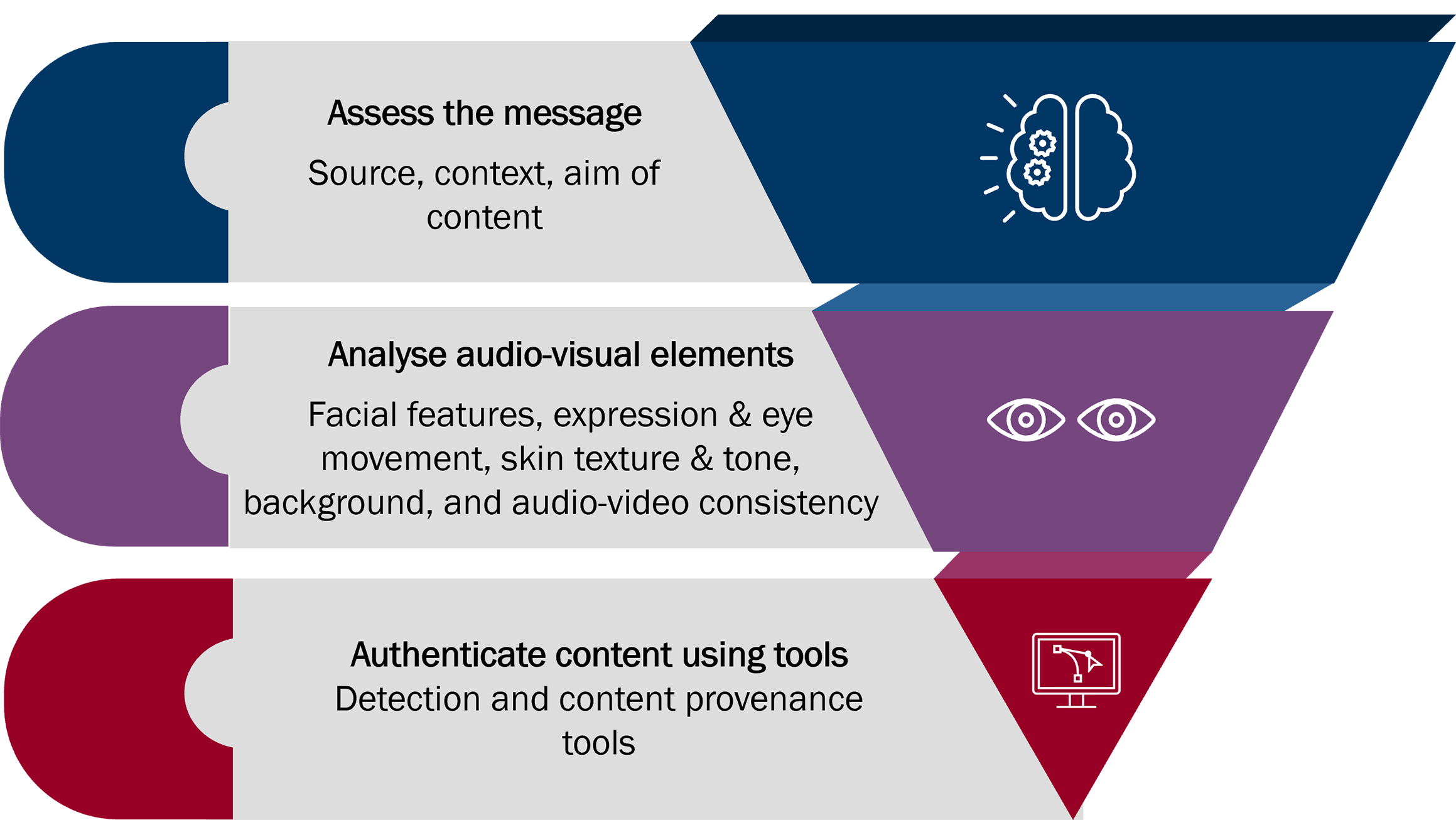

CSA will continue to drive national efforts to uplift the security baseline for AI. We are working with industry and international partners to develop guidelines, codes of practice and standards that will help system owners and adopters make informed decisions about their adoption and deployment of AI. In November 2023, CSA co-sealed the “Guidelines for the Security of AI” with the UK, US and other key partners, which set out security guidelines and measures for developers and system owners. For scams involving the use of deepfakes, SPF and CSA have also issued advisories to raise public awareness about the risks of deepfake scams, how to identify them, and what to do next. For instance, CSA released an advisory in March 2024 to help members of the public spot deepfake scams using a simple ‘3A’ approach (see Figure 1).

As AI technology continues to advance, we also expect it to be more widely adopted across all industries in Singapore. Hence, CSA is actively engaging industry to co-create solutions for AI security, and to fine-tune our approach to securing the adoption of AI. CSA will continue to support the ecosystem with more tools, services, and guidelines to help organisations establish baseline assurance in the security of their AI systems. CSA is also building up R&D capabilities to ensure Singapore remains AI resilient. The Government will continue to develop and refine its AI security standards and support national capability development in AI security.

Harnessing AI's Potential in Cybersecurity

We should continue to regard AI as a technology with significant potential that can be harnessed for good. While we address the risks that malicious actors may abuse AI to empower their attacks, we should also ensure that our cyber defenders can harness AI to fend them off. For cyber defenders, AI has already brought about revolutions in how we address security threats and will continue to influence how this space evolves.

If used well, AI can be a force multiplier to help relieve operator workload and counter the increased scale and sophistication of attacks. AI enables new possibilities for the innovation of cybersecurity solutions, with greater agility, speed, and accuracy. This can help cyber defenders level the playing field, and allow them to identify risks with greater speed, scale, and precision. With AI efficiently handling tedious routine cybersecurity tasks, analysing large volumes of system logs, and automatically patching vulnerable systems, operators will be able to focus on higher-value work.
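As a rough illustration of what automated log triage can look like, the sketch below counts failed logins per source address and flags sources whose counts sit far above the typical level. It is a simple statistical stand-in rather than a trained AI model, and the log format, event name, threshold factor, and function names are all hypothetical assumptions made for the example.

```python
from collections import Counter
from statistics import median

def failed_logins_by_source(log_lines):
    """Count failed-login events per source address.

    Assumes a hypothetical log format: "<timestamp> <source_ip> <event>".
    """
    counts = Counter()
    for line in log_lines:
        fields = line.split()
        if len(fields) == 3 and fields[2] == "LOGIN_FAILED":
            counts[fields[1]] += 1
    return counts

def flag_outliers(counts, factor=5):
    """Return sources whose count exceeds `factor` times the median count."""
    if not counts:
        return []
    typical = max(median(counts.values()), 1)
    return sorted(src for src, n in counts.items() if n > factor * typical)

# Hypothetical sample: three ordinary sources and one source that is
# generating an unusually large number of failed logins.
sample_logs = (
    ["2025-01-01T00:00:01 10.0.0.1 LOGIN_FAILED"]
    + ["2025-01-01T00:00:02 10.0.0.2 LOGIN_FAILED"] * 2
    + ["2025-01-01T00:00:03 10.0.0.3 LOGIN_FAILED"]
    + ["2025-01-01T00:00:04 10.0.0.3 LOGIN_OK"]
    + ["2025-01-01T00:00:05 203.0.113.9 LOGIN_FAILED"] * 40
)

suspicious = flag_outliers(failed_logins_by_source(sample_logs))
print("Sources to review first:", suspicious)
```

Even a crude filter like this narrows thousands of log lines down to a short list for a human operator to review; AI-based tools aim to do the same kind of prioritisation with far richer signals.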

We have already seen AI being used to accelerate anti-scam operations. SPF and GovTech are using AI to accelerate and expand SPF’s operations to detect and block scam websites. AI can help with a preliminary assessment of the potential threat posed by a given website, reducing the load on each Police officer. CSA and GovTech have also taken early steps to review how AI can be used to accelerate our cybersecurity operations at the national level. This is especially important for Singapore, which will continue to face the impact of a shrinking resident labour force.

AI: Both Friend and Foe

AI is likely here to stay. Labelling it “friend” or “foe” is a false dichotomy. We need to be clear-eyed and see the full spectrum of risks and opportunities that AI can bring. Fundamentally, technology is a tool that can be used for good or misused for harm. While it could potentially bring harm to users or introduce new vulnerabilities and risks to systems dependent on AI, it can also enable significant advances in our defensive capabilities. Therefore, Singapore must develop a concerted strategy that deals with the risks and maximises the value that AI brings to our economy, society, and security.

Everyone has a part to play in ensuring the safety and security of our AI-enabled future. The Government, industry, and the public must work together to make AI more friend than foe.